常见的文本分类中,二分类问题居多,多分类问题其实也挺常见的,这里简单给出一个多分类的实验demo。

1 引入相应的库

# 引入必要的库

import numpy as np

import matplotlib.pyplot as plt

from itertools import cycle

from sklearn import svm, datasets

from sklearn.metrics import roc_curve, auc

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import label_binarize

from sklearn.multiclass import OneVsRestClassifier

from scipy import interp

% matplotlib inline

2 加载数据及数据格式转化

实验数据直接使用sklearn中的鸢尾花(iris)数据

(1) 加载数据

iris = datasets.load_iris()

X = iris.data

y = iris.target

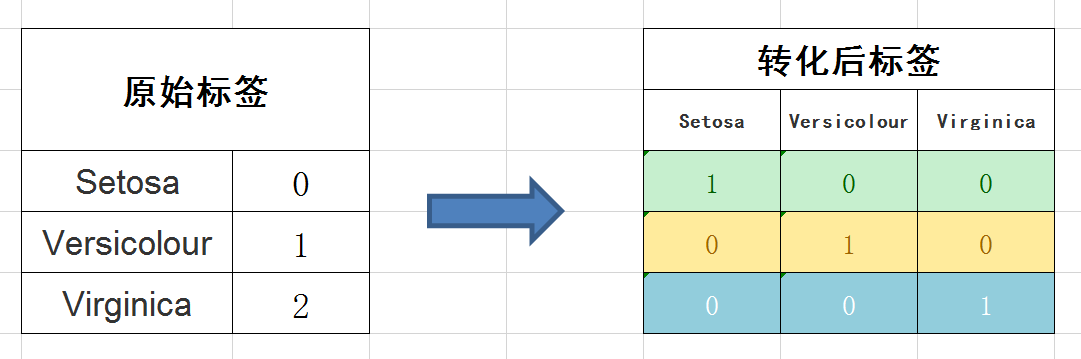

(2) 标签二值化

# 查看原来标签数据格式

print(y.shape)

print(y)

# 标签转化

y = label_binarize(y, classes=[0, 1, 2])

print(y[:3])

(150,)

[0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2]

[[1 0 0]

[1 0 0]

[1 0 0]]

转化示意图

(3)划分训练集和测试集

# 设置种类

n_classes = y.shape[1]

# 训练模型并预测

random_state = np.random.RandomState(0)

n_samples, n_features = X.shape

# 随机化数据,并划分训练数据和测试数据

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=.5,random_state=0)

3 训练模型

# Learn to predict each class against the other

model = OneVsRestClassifier(svm.SVC(kernel='linear', probability=True,random_state=random_state))

clt = model.fit(X_train, y_train)

4 性能评估

(1)分别在训练集和测试集上查看得分

在训练集上查看分类得分

clt.score(X_train, y_train)

0.8133333333333334

在测试集上查看得分

clt.score(X_test,y_test)

0.6533333333333333

(2)查看预测的各类别情况

①利用SVM的方法decision_function给每个样本中的每个类一个评分

y_preds_scores=clt.decision_function(X_test)

y_preds_scores[:5]

array([[-3.58459897, -0.3117717 , 1.78242707],

[-2.15411929, 1.11394949, -2.393737 ],

[ 1.89199335, -3.89592195, -6.29685764],

[-4.52609987, -0.63396965, 1.96065819],

[ 1.39684192, -1.77722963, -6.26300472]])

根据评分将其转化为原始标签格式

np.argmax(clt.decision_function(X_test), axis=1)[:5]

array([2, 1, 0, 2, 0])

②利用predict_proba查看每一类的预测概率

clt.predict_proba(X_test)[:4]

array([[3.80289117e-03, 4.01872348e-01, 9.31103883e-01],

[4.57780355e-02, 7.88455913e-01, 3.39207219e-02],

[9.81843900e-01, 8.97766449e-03, 1.27447369e-04],

[7.34898836e-04, 3.12667406e-01, 9.45766977e-01]])

np.argmax(clt.predict_proba(X_test),axis=1)[:5]

array([2, 1, 0, 2, 0])

参考

【1】sklearn通过OneVsRestClassifier实现svm.SVC的多分类

【2】sklearn学习笔记(3)svm多分类

![Keras examples-imdb_cnn[利用卷积神经网络对文本进行分类]](https://raw.githubusercontent.com/xiongzongyang/hexo_photo/master/keras04.png)